2026.1.5

Why We Built VIBE Bench: Rethinking Evaluation for Real Workloads

A full-stack application benchmark focused on real user experience

VIBE Bench: A Full-Stack Application Evaluation Benchmark for Real User Experience

To measure a model’s full-stack capability to build complete, runnable applications from zero to one, MiniMax introduces a new benchmark: VIBE (Visual & Interactive Benchmark for Execution).

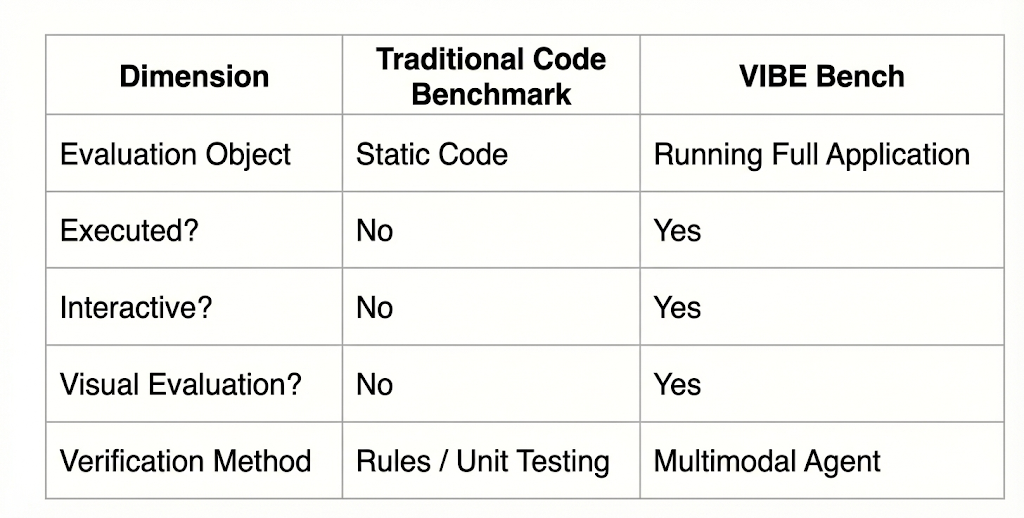

Unlike traditional benchmarks, VIBE automatically evaluates the interaction logic and visual presentation of generated applications in a real execution environment, providing a more faithful assessment of real user experience.

Background & Motivation

When we evaluate large language models today, most widely used benchmarks, such as SWE-bench and Terminal-bench, focus on static code correctness or command-line task completion.

These benchmarks have been extremely valuable for measuring coding ability. But they also rely on an implicit assumption:

If the generated code is logically correct and passes tests, the task is considered complete.

In real-world usage, that assumption often falls short.

What users actually care about is whether:

- the application can be successfully built and launched;

- core features work through real user interaction;

- interactions behave as expected;

- the interface looks modern, polished, and professional.

Despite this gap, there has been no benchmark that systematically evaluates whether model-generated applications truly deliver a usable end-to-end experience.

This is the motivation behind VIBE (Visual & Interactive Benchmark for Execution), a full-stack evaluation benchmark designed around real user experience.

VIBE Bench Overview

VIBE is built to evaluate the entire lifecycle of an application, focusing on how model-generated apps perform in real execution environments.

Unlike existing benchmarks that mainly target Web or backend development, VIBE deliberately includes several critical but often overlooked technical domains, such as:

- Native Android development (Kotlin / Java)

- Native iOS development (Swift / Objective-C)

- High-fidelity Scientific Simulations, where both precise computation and realistic rendering matter

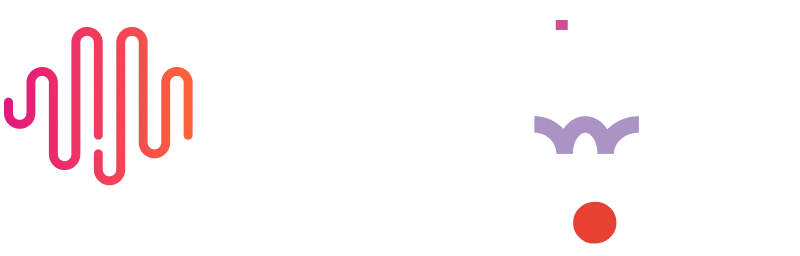

To reflect the diversity of real-world development, the VIBE dataset is organized into the following subsets by technology stack:

- Web: Frontend applications that demand strong visual design and complex DOM interactions

- Simulation: Scientific simulation tasks requiring high-fidelity rendering and accurate numerical computation

- Android: Native Android application development (Kotlin / Java)

- iOS: Native iOS application development (Swift / Objective-C)

- Backend: Server-side systems that emphasize API completeness and overall system architecture

Core Approach: Agent-as-a-Verifier (AaaV)

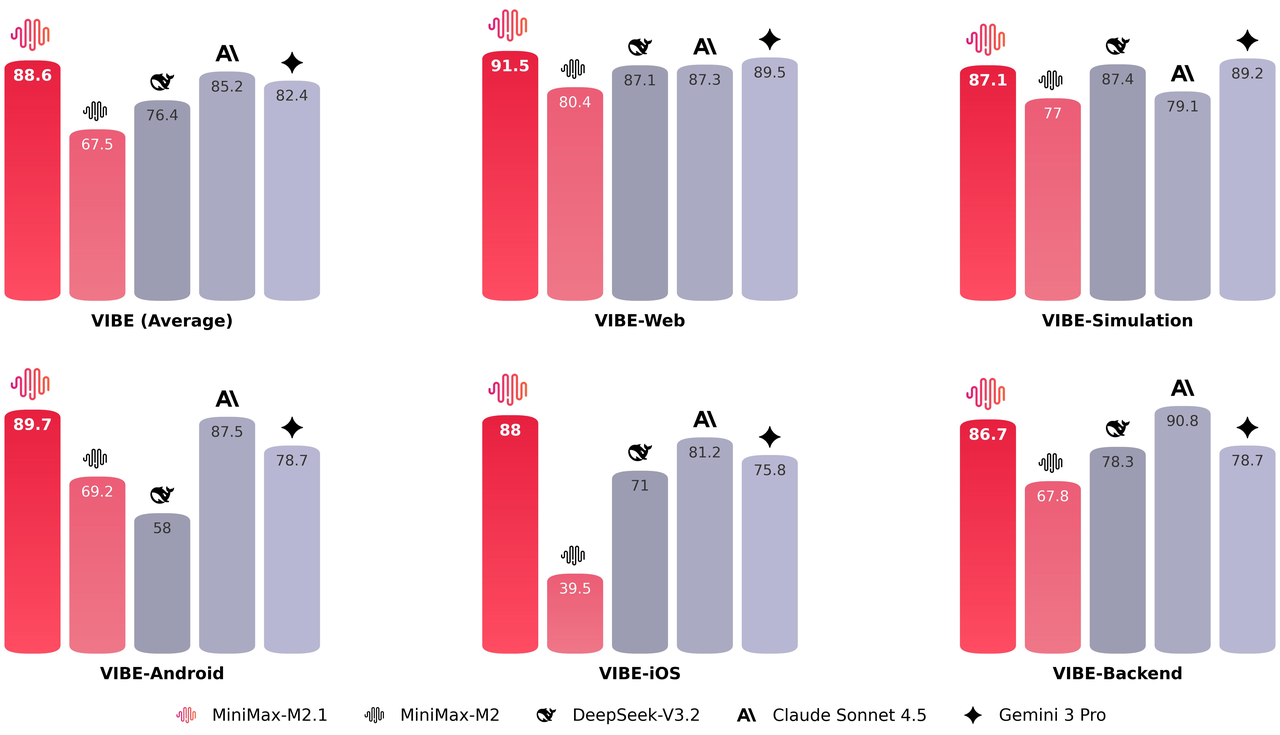

At the core of VIBE is a new verification paradigm we call Agent-as-a-Verifier (AaaV).

VIBE uses a vision-enabled agent to act as an automated QA tester, rather than relying on hand-written rules or static tests. This agent interacts with model-generated applications directly, observing both their behavior and visual output.

Running inside a sandboxed environment, the agent performs end-to-end evaluation of each application (coming soon), from launch and functional interaction to layout, rendering, and overall visual presentation.

By shifting verification from predefined rules to agent-driven interaction, AaaV allows VIBE to evaluate applications in a way that more closely mirrors how real users experience software.
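
The observe-act loop behind this idea can be sketched in a few lines. This is a minimal illustration, not VIBE's actual implementation: `Observation`, `ToyCounterApp`, `ScriptedAgent`, and `verify` are hypothetical names, and the scripted agent stands in for a vision-enabled model that would normally look at real screenshots from a sandboxed app.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Observation:
    """What the agent sees after each step (stand-in for a screenshot plus UI state)."""
    screen_text: str
    crashed: bool = False


class ToyCounterApp:
    """Hypothetical app under test: a counter with a single '+' button."""

    def __init__(self) -> None:
        self.count = 0

    def observe(self) -> Observation:
        return Observation(screen_text=f"Count: {self.count}")

    def act(self, action: str) -> Observation:
        if action == "tap:+":
            self.count += 1
        return self.observe()


@dataclass
class ScriptedAgent:
    """Stand-in for a vision-enabled agent: replays a plan, then judges the final screen."""
    plan: list[str]
    expected_final_text: str
    log: list[str] = field(default_factory=list)

    def next_action(self, obs: Observation) -> str | None:
        self.log.append(obs.screen_text)  # record everything the agent observed
        return self.plan.pop(0) if self.plan else None

    def judge(self, obs: Observation) -> bool:
        return not obs.crashed and obs.screen_text == self.expected_final_text


def verify(app: ToyCounterApp, agent: ScriptedAgent, max_steps: int = 20) -> bool:
    """Let the agent interact until its plan ends, then return its verdict."""
    obs = app.observe()
    for _ in range(max_steps):
        action = agent.next_action(obs)
        if action is None:
            break
        obs = app.act(action)
    return agent.judge(obs)


agent = ScriptedAgent(plan=["tap:+", "tap:+"], expected_final_text="Count: 2")
passed = verify(ToyCounterApp(), agent)
print(passed)
```

The point of the sketch is the division of labor: the app only exposes what a user could see or do, and the verdict comes from the agent's observations rather than from assertions written against the app's internals.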

The Three Evaluation Layers of VIBE Bench (1)

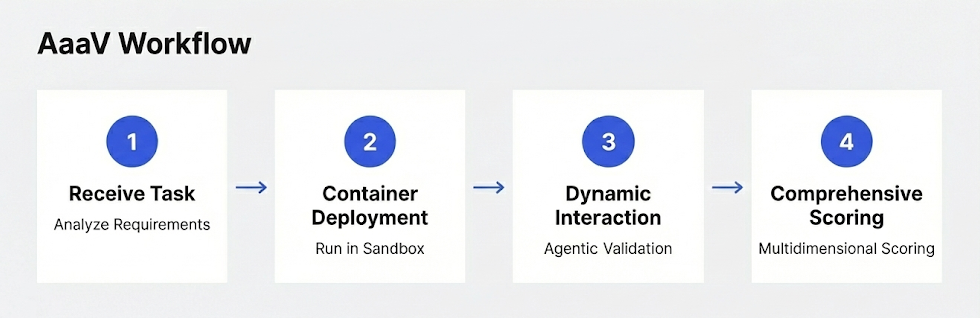

Execution Layer

The first question VIBE asks is a very basic one:

Can the application actually survive?

At the execution layer, we check whether the generated app can make it through the most fundamental hurdles:

- Does the project compile successfully?

- Can the application be built without errors?

- Does it launch and run without crashing?
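
One way to picture these checks is as a build step followed by a short liveness test. The sketch below is an assumption about how such a gate could look, not a description of VIBE's harness: `check_execution`, `ExecutionResult`, and the two-second grace period are hypothetical, and the Python one-liners stand in for real build and launch commands. It also assumes the app is long-running, so a process that exits during the grace period counts as a failed launch.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class ExecutionResult:
    built: bool
    launched: bool


def check_execution(build_cmd: list, run_cmd: list,
                    startup_seconds: float = 2.0) -> ExecutionResult:
    """Run the build, then confirm the app is still alive after a grace period."""
    build = subprocess.run(build_cmd, capture_output=True)
    if build.returncode != 0:
        return ExecutionResult(built=False, launched=False)

    proc = subprocess.Popen(run_cmd, stdout=subprocess.DEVNULL,
                            stderr=subprocess.DEVNULL)
    try:
        proc.wait(timeout=startup_seconds)
        launched = False  # exited during startup: treated as a crash
    except subprocess.TimeoutExpired:
        launched = True   # still running after the grace period
    finally:
        if proc.poll() is None:  # clean up the app if it is still running
            proc.kill()
            proc.wait()
    return ExecutionResult(built=True, launched=launched)


# Stand-in commands; a real task would invoke its own build tool and launcher.
build_ok = [sys.executable, "-c", "pass"]
build_fail = [sys.executable, "-c", "raise SystemExit(1)"]
long_running = [sys.executable, "-c", "import time; time.sleep(30)"]
crash_on_start = [sys.executable, "-c", "raise RuntimeError('boom')"]

healthy = check_execution(build_ok, long_running)
crashing = check_execution(build_ok, crash_on_start)
broken = check_execution(build_fail, long_running)
print(healthy, crashing, broken)
```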

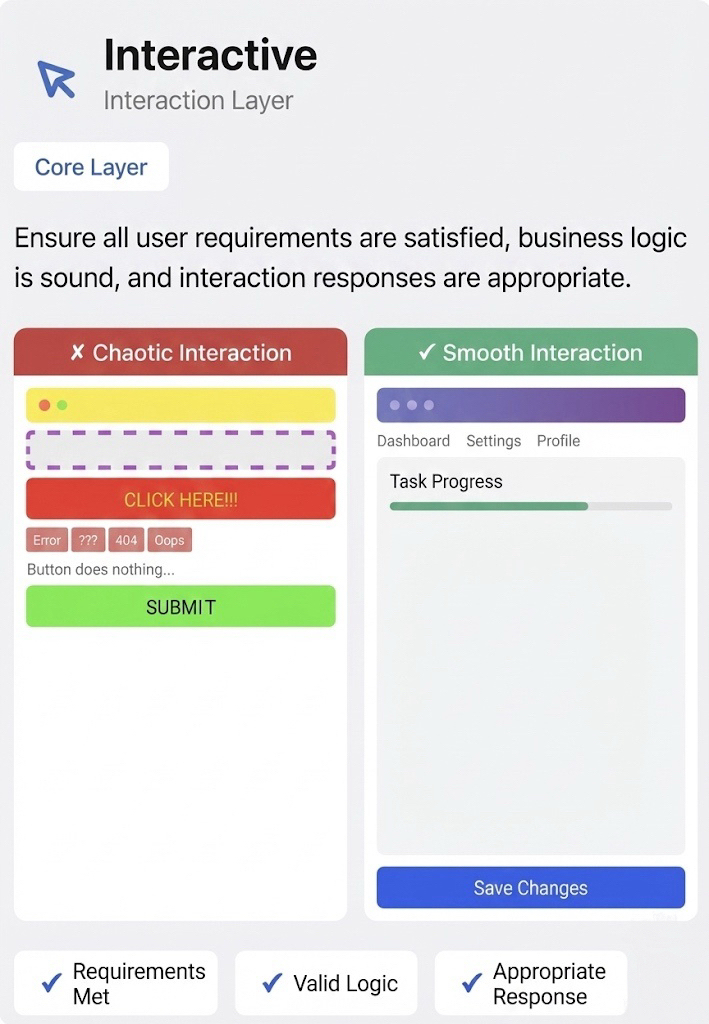

The Three Evaluation Layers of VIBE Bench (2)

Interaction Layer

Validates whether core functions are "usable":

- Whether interactions are responsive

- Whether business workflows can be completed end-to-end

- Whether key functions align with user intent
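
To make "completed end-to-end" concrete, a workflow can be modeled as ordered steps, each with a post-condition a user would look for. This is an illustrative sketch only: `ToyTodoApp`, `Step`, and `run_workflow` are hypothetical names, and in VIBE the actions and checks would be carried out by the verifier agent on a running app rather than by lambdas on an in-memory object.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


class ToyTodoApp:
    """Hypothetical app under test: an in-memory to-do list."""

    def __init__(self) -> None:
        self.items: list[str] = []
        self.done: set[str] = set()

    def add(self, title: str) -> None:
        self.items.append(title)

    def complete(self, title: str) -> None:
        if title in self.items:  # unknown items are silently ignored
            self.done.add(title)


@dataclass
class Step:
    description: str
    action: Callable[[ToyTodoApp], None]
    expect: Callable[[ToyTodoApp], bool]  # post-condition a user would look for


def run_workflow(app: ToyTodoApp, steps: list[Step]) -> tuple[bool, list[str]]:
    """Run steps in order and stop at the first failed post-condition."""
    completed: list[str] = []
    for step in steps:
        step.action(app)
        if not step.expect(app):
            return False, completed
        completed.append(step.description)
    return True, completed


workflow = [
    Step("add an item", lambda a: a.add("buy milk"), lambda a: "buy milk" in a.items),
    Step("mark it done", lambda a: a.complete("buy milk"), lambda a: "buy milk" in a.done),
]
ok, completed = run_workflow(ToyTodoApp(), workflow)
print(ok, completed)
```

Stopping at the first failed step mirrors how a user experiences a broken flow: later features do not matter if an earlier step blocks the path to them.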

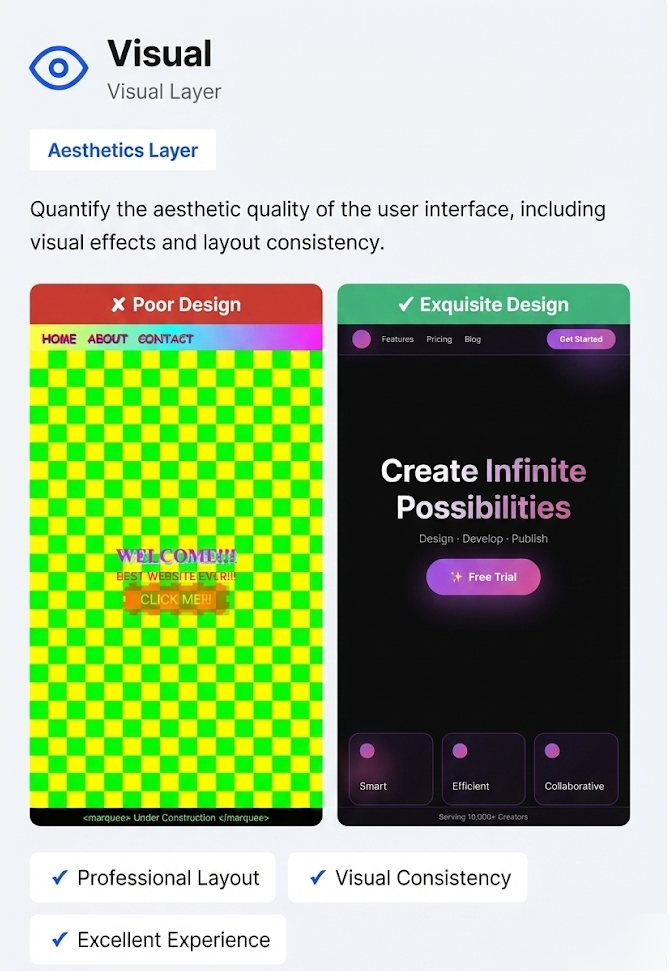

The Three Evaluation Layers of VIBE Bench (3)

Visual & Aesthetics Layer

Validates whether the interface has "production-grade presentation":

- Whether the layout is reasonable and professional

- Whether the visual hierarchy is clear

- Whether the color scheme is harmonious

- Whether the overall style complies with modern UI design standards
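
The four criteria above lend themselves to a simple rubric. The sketch below assumes a vision judge returns an integer score per criterion; the 1 to 5 scale, the 3.5 pass threshold, and the names `CRITERIA` and `summarize_visual_scores` are all assumptions for illustration, since the source does not describe how VIBE scores this layer.

```python
from statistics import mean

# Criteria mirror the list above; the scale and threshold are assumptions.
CRITERIA = ("layout", "visual_hierarchy", "color_harmony", "modern_style")


def summarize_visual_scores(scores: dict, pass_threshold: float = 3.5) -> dict:
    """Validate a rubric filled in by a (hypothetical) vision judge and summarize it."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    for name in CRITERIA:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    average = mean(scores[c] for c in CRITERIA)
    weakest = min(CRITERIA, key=lambda c: scores[c])  # first lowest-scoring criterion
    return {"average": average, "weakest": weakest, "passed": average >= pass_threshold}


judge_scores = {"layout": 4, "visual_hierarchy": 5, "color_harmony": 3, "modern_style": 4}
summary = summarize_visual_scores(judge_scores)
print(summary)
```

Reporting the weakest criterion alongside the average keeps the result actionable: an interface can pass overall while still showing where it falls short.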

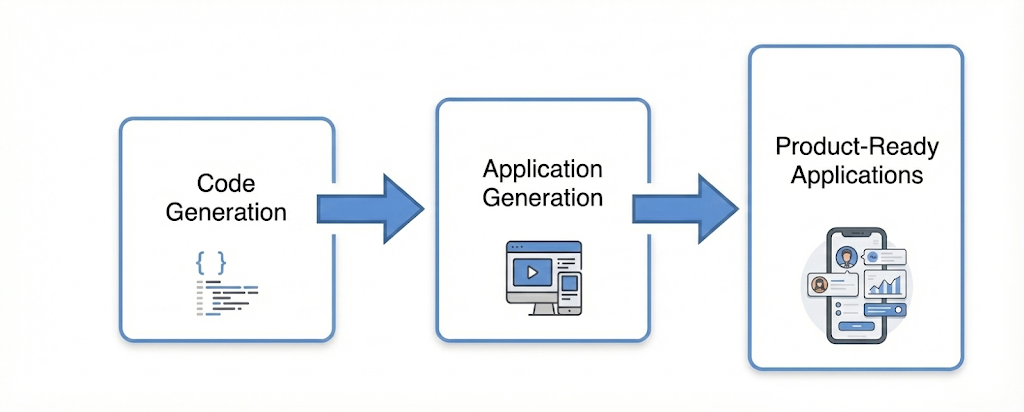

From "Is the code correct?" to "Is the application usable and deliverable?"

VIBE Bench reflects a critical phase transition in the evolution of model capabilities: the question is no longer only whether the code is technically correct, but whether the resulting application can actually be used and delivered.

VIBE Bench provides a unified, scalable standard for evaluating and training full-stack generative models oriented toward real-world scenarios, with the goal of advancing model capabilities from "technical correctness" to "practical deployment value."

https://huggingface.co/datasets/MiniMaxAI/VIBE/blob/main/README.md?code=true
